For me at MTU, it was always about the hardware. I used my technical electives to build the equivalent of a computer engineering minor along with my CS degree. Upon graduating in 1990, I landed a job with IBM (Poughkeepsie, NY) writing performance simulators (along with specialized high-level HDLs, tracing tools, and visualization tools) for evaluating processor pipelines, cache hierarchies, and SMP structures for IBM’s mainframes. Early on I was surrounded by brilliant “old-timers” in development and research (even an ancient IBM Fellow who had once worked as John von Neumann’s lab director). During those first few years I was a sponge, learning many things about weighing trade-offs on the “what-should-be’s” of advanced processor architectures.

But after a few years, the mainframe died. Or so the pundits said. Massive layoffs, restructuring, a new CEO, and a diaspora of remaining talent to other parts of IBM.

Fast forward a few years and I got the opportunity to move to Austin, TX, into a new organization bridging mainframe know-how with the growing new world of RISC processing (IBM’s “Power” architecture). IBM was coming back and the mainframe was stabilizing. But RISC was the new kid in town, and the dot-com boom was happening there. IBM’s CEO made a big bet, pushing Power RISC to the center of IBM’s e-business strategy. Several competing Power processor projects were killed to consolidate into a new one: POWER4.

I was in the right place at the right time, as a processor performance architect, driving and evaluating the ideas that formed the basis for POWER4.

Somewhere in the middle of POWER4 development, a few top architects who had taken notice of me pulled me out of my performance role to work directly in the microarchitecture/logic area. This was no longer “what-should-be”. It was: how do you make “what-should-be” a reality? Invent the hardware algorithms. Get them to work functionally. Get them to be physically realizable in silicon. Understand all the economics of balancing the constraints. Somehow, they forgot I was a computer scientist, not an engineer. But I’ve always felt MTU prepared me for a broader set of challenges than just the typical computer science stuff.

At this point in my career, I was already an established veteran in the performance role, so taking on this new challenge was a huge risk. It paid off handsomely. By combining expertise in “what-should-be” with expertise in the economics of making “what-should-be” a reality, I rose to the top. By the time we built POWER6 (2002-2007), I was chief architect for the whole SMP cache hierarchy, memory and I/O subsystems, and chipset/system structure for IBM’s Power server processors. Working through my team of 10 to 15 architect specialists and numerous execution leads and project managers, I was directing the work of hundreds of people across several development sites.

By 2012 I was promoted to Distinguished Engineer, a nod to the accomplishments of the prior decade. In 2016, I took my turn as the overall chip architect for the Power10 processor, adding processor core and management functions to my more localized scope of expertise and tripling the number of people I was directing. We released Power10 processors and systems in 2021, finishing them up during the pandemic, which added a whole new kind of strain. I am still in the overall chip architect role for the follow-ons to Power10 that are currently in development.

Summing it up, during my career, early on, I made minor contributions to some early

1990’s mainframes and the POWER3 RISC processor. Later, I made major contributions

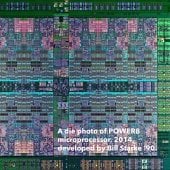

to POWER4 and POWER5. Then I was chief architect for cache, SMP, memory, and I/O systems

for POWER6, POWER7, POWER8, and POWER9; and overall chief architect for Power10 (silly

branding change went from upper case “POWER” to lower case “Power”). Through collaborations

with my counterparts in mainframe development, I’ve also made minor contributions

to several more recent mainframes, most recently Z16. Power systems and mainframe

systems are used behind the scenes for mission critical finance, insurance, medical,

retail, logistics, manufacturing, and government operations. Almost all the large

“household name” companies that people do business with, rely on Power systems for

their mission critical applications. Power supports three operating environments:

AIX (IBM’s high value UNIX), Linux, and IBM i (formerly known as AS/400, which evolved

from the S/38 and S/36 minicomputer platforms).

I’ve haven’t even touched on many of the adventures I’ve had while working on all

those processors: calling out VLIW as a dud before Intel and HP even started pursuing

the Itanium VLIW architecture; deep collaborations with Japanese engineers from Hitachi;

creating a specialized supercomputer for the DoD (it seemed like we were living a

science fiction novel); working with game console processors when IBM built them for

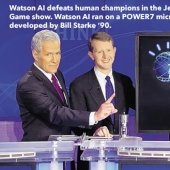

Playstation, Xbox, and Wii; building the computer that beat human Jeopardy game show

champions; partnering with Google, NVIDIA, Mellanox, Micron, and many more on numerous

hardware innovations; building the Summit and Sierra supercomputers (#1 and #2 on

the top 500 list); creating new memory and composability architectures; traveling

throughout the U.S., Europe, and east Asia, speaking at client conferences, launch

events, and training sessions. Any of these could be the basis for many more stories.

I feel very fortunate, and I’d be nowhere without the great education I got at MTU, the education I got from mentors at IBM, and the opportunity to work with so many brilliant, motivated, and kind teammates at IBM. As a student, I never believed this dream job could be possible; then somehow it happened to me.