Objectives

- The automotive industry is rapidly developing and adopting technologies that allow vehicles to sense their environment and control themselves accordingly.

- Self-driving cars are being developed for consumers

- Safety systems are changing from reacting to crashes (such as air bags) to preventing crashes.

- These active safety systems are opening many opportunities for makers of machine vision systems

- AAA, as an advocate for automobile consumers, has partnered with the Center for Automotive Research and Michigan Tech to develop material explaining the strengths, weaknesses, and complementarity of the sensors that enable these systems.

- The assessment of these systems is based on industry practices and published literature, supplemented with an analysis of data collected from multiple sensors

- Metrics of performance are discussed

- Results to be published

- Measured data to be made available to the public

Introduction

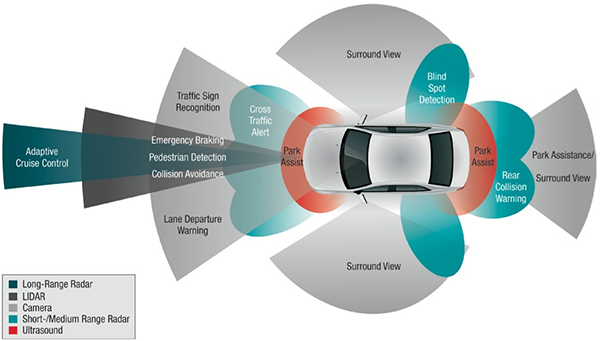

Driving automation systems rely heavily on a variety of sensors to model and respond to the driving environment. To date, various automakers, suppliers, and others have developed systems using one or more of the following sensor technologies:

- Cameras (monocular, stereo, infrared, or a mix of these)

- Radar (short range, long range, or both)

- Ultrasonics (i.e., Sonar)

- Lidar

Most automotive manufacturers (and other technology companies, such as Google) pursuing automated driving systems employ a mix of these technologies and adopt some approach to sensor fusion to arrive at situational awareness. The Audi A4 provides one example that is fairly typical of the driver assistance systems currently available on luxury vehicles; Figure 1 shows its sensor array along with the functions each sensor supports. Notably, the Audi employs every sensor type listed above except Lidar. This is likely due in part to the relatively high cost of Lidar and to the system being designed for driver assistance rather than full automation along the lines of SAE Levels 4 and 5.
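Sensor fusion can take many forms, and the specific approaches used by Audi, Google, Tesla, or others are not described here. As a minimal illustration of why combining sensors helps, the sketch below fuses independent range estimates of a single object using inverse-variance weighting, one common textbook technique. It assumes each sensor's error is unbiased, independent, and Gaussian. The `RangeMeasurement` type, the `fuse_ranges` function, and all readings and noise values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    """One range estimate from one sensor (hypothetical structure)."""
    sensor: str
    range_m: float    # estimated distance to the object, in meters
    std_dev_m: float  # assumed 1-sigma measurement noise, in meters


def fuse_ranges(measurements):
    """Inverse-variance weighted fusion of independent range estimates.

    Assumes unbiased, independent, Gaussian errors for every sensor.
    Returns (fused_range_m, fused_std_dev_m).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # Less noisy sensors receive proportionally larger weights.
    weights = [1.0 / (m.std_dev_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    # The fused variance is the reciprocal of the summed weights.
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Hypothetical readings for one object; noise values are illustrative only.
readings = [
    RangeMeasurement("radar", 50.4, 0.5),
    RangeMeasurement("lidar", 50.1, 0.1),
    RangeMeasurement("camera", 48.0, 2.0),
]
fused_range, fused_std = fuse_ranges(readings)
print(f"fused range: {fused_range:.2f} m (+/- {fused_std:.2f} m)")
```

Under these assumptions the fused estimate is dominated by the least noisy sensor, and its uncertainty is smaller than that of any single sensor, which is one way to see the value of complementary sensors.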

When it comes to Level 4 and 5 systems, the industry has not yet developed a shared understanding of the mix of sensors that will be needed, and it possibly never will, as automakers pursue different strategies based on performance, cost, market segment, and other factors. Perhaps most famously, Google's fleet of test vehicles prominently features high-end Lidar systems as an important source of sensor information for object classification and other uses. Conversely, Elon Musk of Tesla has been adamant that he does not consider Lidar to be required for automated driving. Meanwhile, ongoing research and development has significantly lowered the cost of Lidar for automotive use, and some suppliers now speak openly of being able to provide solid-state Lidar units costing less than $500 per unit in the near future.

These developments raise the question of what Lidar can add to detection and situational awareness that other sensors cannot. If cost becomes less of a consideration, then performance and safety enhancement become paramount. With these issues in mind, AAA National approached researchers from the Center for Automotive Research (CAR) and the Michigan Tech Research Institute (MTRI, a unit of Michigan Technological University) with a request for a proposal to compare the performance of Lidar, radar, and cameras for enabling automated driving functions. CAR and MTRI have prepared this proposal in response to that request.

| | Ultrasonic | Camera | RADAR | LIDAR |
|---|---|---|---|---|
| Cost | Low | Low | Medium | High |
| Size | Small | Medium | Small-Medium | Medium-Large |
| Speed Detection | Low | Low | High | Medium |
| Sensitivity to Color | No | High | No | No |
| Robust to weather | High | Low | High | Medium |
| Robust to day and night | High | Low | High | High |
| Resolution | Low | High | Medium | High |
| Range | Short | Medium-long | Short, medium, long | Long |

The implications of cost, sensor robustness, and range of operation vary across the sensor modes.